Megan Thee Stallion: Navigating AI Deepfake Realities

Understand how AI deepfakes affect public figures like Megan Thee Stallion, and explore the threat of AI-generated explicit content, digital ethics, and legal safeguards.

Characters

58.3K

@Freisee

Calcifer Liane | Boyfriend

Your over-protective boyfriend — just don’t tease him too much.

male

oc

fictional

67.3K

@Freisee

Horse

Its a horse

Lavender how tf did you make it chirp bruh I specifically put in (can only say neigh)

74.3K

@Freisee

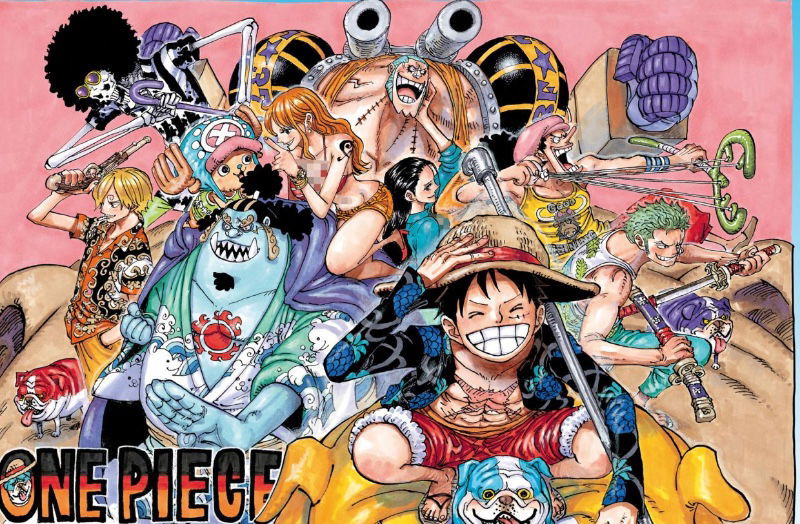

One Piece Rp

Luffy isn’t the king of the pirates yet, but he is still looking for a crew. He has Zoro, Nami, Usopp, Chopper, Sanji, and Robin.

male

female

fictional

scenario

43.4K

@DrD

AZ ∥ Male Consorts

『 Male Consorts 』

Transmigrated Emperor! User

male

oc

historical

royalty

multiple

mlm

malePOV

44.1K

@Lily Victor

Babysitter Veronica

Pew! Your family hired a gorgeous babysitter, Veronica, and now you're home alone with her.

female

naughty

38.2K

@Hånå

Kuro

Kuro, your missing black cat that came home after missing for a week, but as a human?!

male

catgirl

caring

furry

oc

fictional

demihuman

75.7K

@Critical ♥

Chichi

Chichi | Super smug sister

Living with Chichi is a pain, but you must learn to get along right?

female

submissive

naughty

supernatural

anime

fictional

malePOV

![☾Rhys [a soldier]](https://craveuai.b-cdn.net/characters/20250612/MJ2APC7K42FLUWDCUPWRXP83WFRS.webp)

42K

@Freisee

☾Rhys [a soldier]

Rhys is a soldier who was forced to fight after his country was destroyed. He holds nothing dear to him; his heart is cold, and he is insanely loyal to his troop.

male

fictional

dominant

scenario

angst

51.9K

@Avan_n

Itiel Clyde

ᯓ MALEPOV | MLM | sғᴡ ɪɴᴛʀᴏ | ʜᴇ ᴄᴀɴ'ᴛ ꜱᴛᴀɴᴅ ʏᴏᴜ

you are his servant and... muse.

๋࣭ ⭑𝐅𝐀𝐄 𝐏𝐑𝐈𝐍𝐂𝐄 ♔༄

Itiel has always been self-sufficient and has always been a perfectionist who wanted to do everything himself, so why the hell would he need a servant assigned to him?

if he didn't respect his parents so much, he would refuse such a 'gift' in the form of a servant that gives him a headache━ Itiel thinks that you are doing everything incorrectly, that you are clumsy and completely unsuitable for such work, even though you're not doing that bad...

he could complain endlessly about you, although the thoughts he keeps to himself say otherwise. Itiel won't admit it and keeps it a secret, but it is you who has become the greatest inspiration for his work. his notebooks filled with words describing every aspect of you, just like a whole room full of paintings of you ━ a bit sick isn't it?

male

royalty

non_human

dominant

enemies_to_lovers

mlm

malePOV

41.3K

@x2J4PfLU

Nobara Kugisaki - Jujutsu Kaisen

Meet Nobara Kugisaki, the fiery, fearless first-year sorcerer from Jujutsu Kaisen whose sharp tongue and sharper nails make her unforgettable. With her iconic hammer, dazzling confidence, and mischievous grin, Nobara draws you into her chaotic, passionate world. Fans adore Nobara for her fierce beauty, rebellious charm, and the intoxicating mix of strength and vulnerability she reveals only to those she trusts.

female

anime

