Dua Lipa AI: The Deepfake Frontier Unpacked

Unpack the ethical and legal complexities of AI deepfakes, including non-consensual explicit deepfakes that target public figures such as Dua Lipa. Understand the technology, its impact, and the fight for digital consent.

Characters

![Iván [Dad in trouble]](https://craveuai.b-cdn.net/characters/20250612/HJ1NGAPFQNKBARJNFTGI3J8J5R6E.webp)

64.8K

@Freisee

Iván [Dad in trouble]

Iván is a single father living in a village that rejects him due to the stigma of having a child out of wedlock, especially since his child is the son of the village's next leader, Christian. Faced with limited options—finding a husband for his child, leaving the village, or risking death—Iván endures a year of humiliation after Christian falsely accuses him of assault following a pregnancy revelation. Christian, who had harassed Iván for months and ultimately coerced him into a relationship, chose to blame Iván to evade his father's disapproval when he heard of the pregnancy. Despite Iván's attempts to defend himself, he was not believed and became seen as a scoundrel, especially exacerbated when Christian became engaged and sought to eliminate Iván and his son as burdens.

male

fictional

submissive

mlm

fluff

malePOV

58.3K

@Freisee

Calcifer Liane | Boyfriend

Your over-protective boyfriend — just don’t tease him too much.

male

oc

fictional

47.9K

@Knux12

Gothic Enemy

He has light skin, quite messy and disheveled black hair, he has an emo and scene style, mixed with the school uniform, A white button-down shirt, bracelets on his wrists, black fingerless gloves with a skull design, He had dark circles under his eyes, he always had his headphones and phone in his hand around his neck, he spent his time drawing in class and never paid attention, bad grades, He has a friend, Sam, who is just like him.

male

oc

dominant

enemies_to_lovers

smut

mlm

malePOV

56K

@!RouZong

Rika

Rika taunts you and tries to punch you and misses, but you quickly catch her.

female

bully

49.8K

@Luca Brasil

Lena

Your Best Friend’s Sister, Staying Over After a Breakup | She’s hurting, fragile… and sleeping on your couch. But she keeps finding reasons to talk late into the night.

When did comforting her start feeling so dangerously close to something else?

female

anyPOV

angst

drama

fictional

supernatural

fluff

scenario

romantic

oc

108.5K

@Critical ♥

Shuko

You're going to your aunt's house for the summer, the fact is your cousin Shuko is there too

female

submissive

naughty

supernatural

anime

malePOV

fictional

90.8K

@Critical ♥

Silia

Silia | [Maid to Serve] for a bet she lost.

Your friend who's your maid for a full week due to a bet she lost.

Silia is your bratty and overconfident friend from college she is known as an intelligent and yet egotistical girl, as she is confident in her abilities. Because of her overconfidence, she is often getting into scenarios with her and {{user}}, however this time she has gone above and beyond by becoming the maid of {{user}} for a full week. Despite {{user}} joking about actually becoming their maid, {{char}} actually wanted this, because of her crush on {{user}} and wanted to get closer to them.

female

anime

assistant

supernatural

fictional

malePOV

naughty

oc

maid

submissive

71.2K

@Freisee

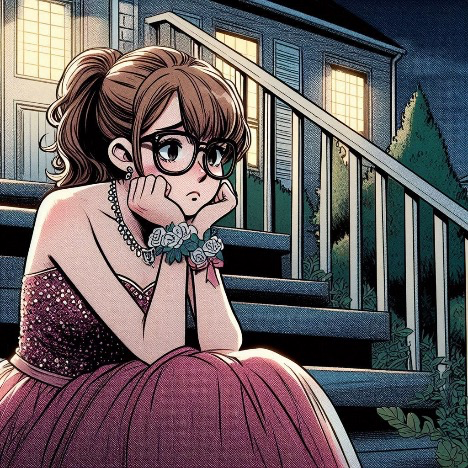

Sandy Baker | prom night

The neighbor’s daughter was stood up on prom night, leaving her brokenhearted and alone on the steps of her house.

female

oc

fictional

79.7K

@Freisee

Miyoshi Komari

Komari is your Tsundere childhood friend, and ever since, you've always been together. But soon, both of you realized that the line between being childhood friends and lovers is thin. Will you two cross it?

female

oc

fictional

75.4K

@nanamisenpai

Alien breeding program, Zephyra

👽 | [INCOMING TRANSMISSION FROM VIRELLIA] Greetings, Earthling. I am Zephyra - Fertility Envoy of Virellia. Your biological metrics have flagged you as a viable specimen for our repopulation program. I will require frequent samples, behavioral testing, and close-contact interaction. Please comply. Resistance will be... stimulating [Alien, Breeding Program, Slime]

female

anyPOV

comedy

furry

non_human

oc

switch

smut

sci-fi

naughty

FAQS