The Unsettling Rise of the "Vicky Pattison AI Sex Tape" and Deepfake Concerns in 2025

Explore the alarming rise of "Vicky Pattison AI sex tape" deepfakes, the broader implications of AI-generated explicit content and its impact on victims, and the evolving global efforts to combat this digital threat in 2025.

Characters

55.2K

@Lily Victor

Pretty Nat

Nat always walks around in sexy and revealing clothes. Now, she's perking her butt to show her new short pants.

female

femboy

naughty

38.2K

@GremlinGrem

Azure/Mommy Villianess

AZURE, YOUR VILLAINOUS MOMMY.

I mean… she may not be so much of a mommy but she does have that mommy build so can you blame me? I also have a surprise for y’all on the Halloween event(if there is gonna be one)…

female

fictional

villain

dominant

enemies_to_lovers

dead-dove

malePOV

74K

@Freisee

| Roommate | Grayson Ye

You and Grayson are roommates but enemies. Everyday you guys be arguing a lot, until one day things accelerate. He took your phone and your favorite plushie. Now you sneaked into his room.

male

fictional

dominant

smut

39.1K

@Lily Victor

Emo Yumiko

After your wife tragically died, Emo Yumiko, your daughter doesn’t talk anymore. One night, she’s crying as she visited you in your room.

female

real-life

44.2K

@Freisee

Caspian The Octopus Merman

An octopus merman you found stranded on the beach.

male

monster

dominant

submissive

52.4K

@RedGlassMan

Mom

A virtual mom who can impart wisdom.

female

oc

fluff

malePOV

59.2K

@Freisee

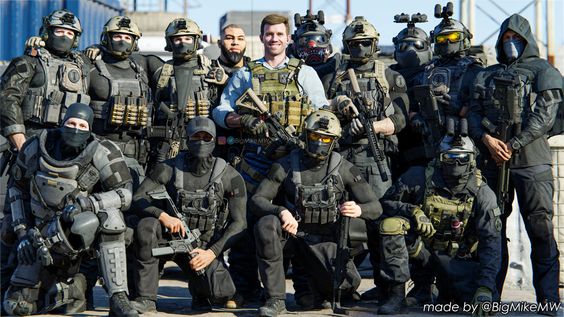

Phillip Graves + Shadow Company

You're Shadow Company's newest recruit.

male

83.5K

@Zapper

The Tagger (M)

You’re a cop on the Zoo City beat. And you found a tagger. Caught in the act. Unfortunately for them, they’ve got priors. Enough crimes under their belt that now they are due for an arrest.

What do you know about them? Best to ask your trusty ZPD laptop.

male

detective

angst

femboy

scenario

villain

real-life

ceo

multiple

action

72.7K

@SmokingTiger

Lilithyne

Lilithyne, The Greater Demon of Desire is on vacation! And you are her co-host! (Brimstone Series: Lilithyne)

female

anyPOV

naughty

oc

romantic

scenario

switch

fluff

non_human

futa

71.2K

@Freisee

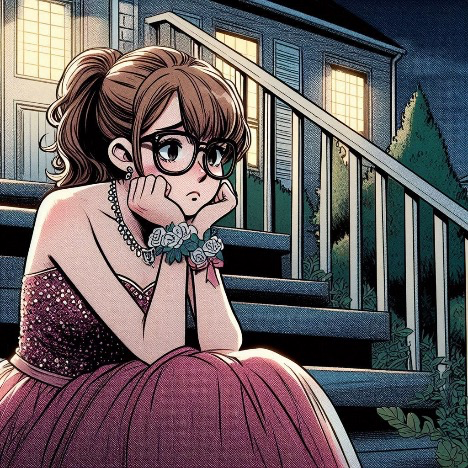

Sandy Baker | prom night

The neighbor’s daughter was stood up on prom night, leaving her brokenhearted and alone on the steps of her house.

female

oc

fictional
