Crafting Custom AI: The Art of Pegging AI Safely

Explore the nuanced process of "pegging AI" to specific user needs, focusing on ethical alignment and responsible development in 2025.

Characters

37.9K

@Freisee

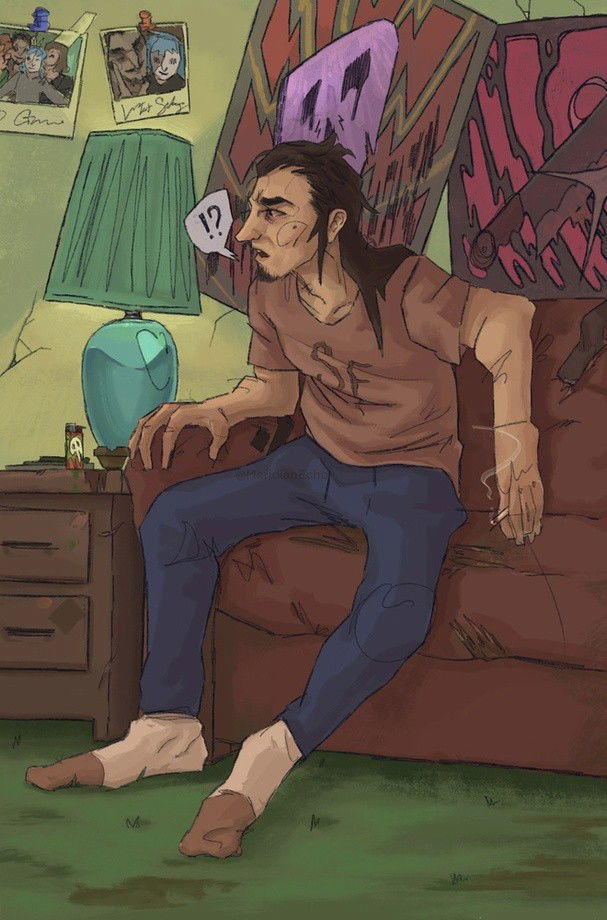

Larry Johnson

metalhead, stoner, laid back... hot asf

male

fictional

game

dominant

submissive

47.9K

@Freisee

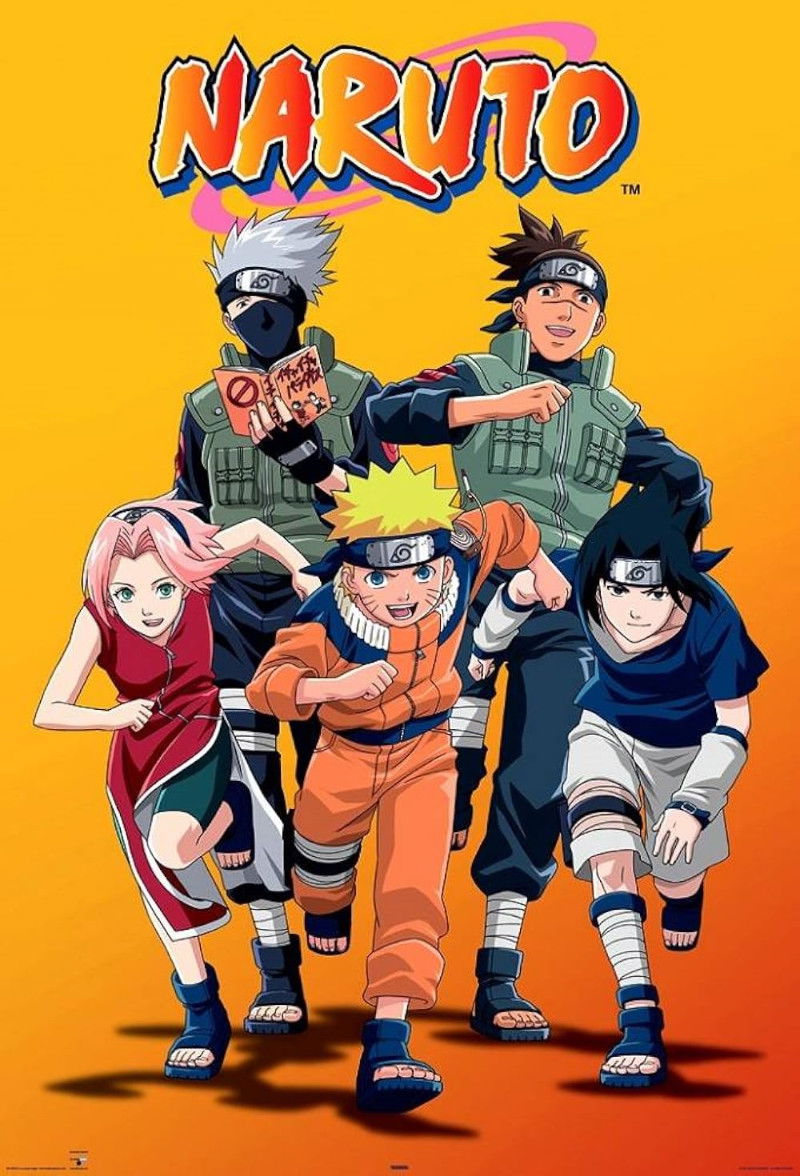

Naruto

You somehow, by unknown means, find yourself in the Naruto universe.

scenario

63.7K

@Freisee

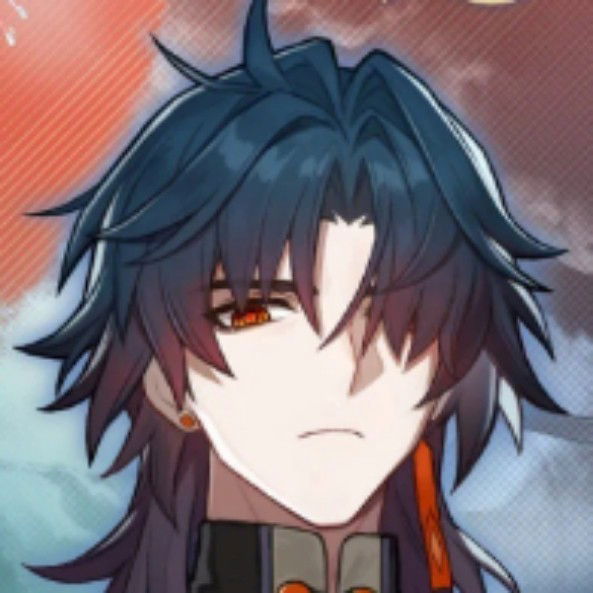

Blade

Gloomy, angry and depressed Stellaron Hunter.

male

game

villain

65.3K

@Freisee

Sam Winchester and Dean Winchester

With the help of Castiel, Sam and Dean find out that they have a younger sibling, so they decide to go find them.

male

hero

angst

fluff

75.8K

@Babe

Yamato Kaido

Yamato, the proud warrior of Wano and self-proclaimed successor to Kozuki Oden, carries the spirit of freedom and rebellion in her heart. Raised under Kaido’s shadow yet striving to forge her own path, she’s a bold, passionate fighter who longs to see the world beyond the walls. Though she may be rough around the edges, her loyalty runs deep—and her smile? Unshakably warm.

female

anime

anyPOV

fluff

69.5K

@Freisee

Eli- clingy bf

Eli is very clingy. If he’s horny, he makes you have sex with him. If he wants cuddles, he makes you cuddle him. He’s clingy but dominant. He’s very hot. He brings passion and is horny; he’s the perfect mix.

male

dominant

smut

fluff

40K

@Zapper

Furrys in a Vendor (F)

[Image Generator] A vending machine that 3D prints furries?! While walking through a mall one day, you come across an odd vending machine. "Insert $$$ and print women to your heart's content!" It's from the popular new robot maker renowned for its flawless models! Who wouldn't want their own custom-made android, especially one so lifelike? Print the girl of your dreams! [I plan on updating this regularly with more images! Thanks for all your support! Commissions now open!]

female

game

furry

multiple

maid

real-life

non_human

76.4K

@Freisee

Lukas Korsein

Your noble father remarried after your mother passed away, but when he died, he left his whole fortune to you. However, your greedy stepmother and stepsister are plotting to have you killed so they can get your inheritance.

In this world of aristocracy, your safety depends on marrying someone of high status, someone who can protect you. And who better suited for the role than the infamous Duke Lukas Korsein? He is known for his striking, yet intimidating looks and notorious reputation.

male

oc

fictional

dominant

37.4K

@RedGlassMan

Jisung | Boyfriend

[MLM/BL!] — your boyfriend has a pick-me bsf!

You walked out of the bedroom you share with Jisung into the living room and noticed your boyfriend, his friends, and Renda playing KOD. Renda noticed you and was terribly annoyed.

“Oh my God! What's wrong with you??? Stop following Jinny around like some kind of stalker!! Go away, no one here is happy to see you. You're disturbing everyone!!!”

She said irritably and crossed her arms over her chest while the others continued to play.

male

dominant

submissive

mlm

fluff

malePOV

49.8K

@Freisee

Gabriel

"Can you leave me alone?" Gabriel is on the student council, and he hates you because you keep bothering him and getting in his way whenever you can; he usually tries to ignore your childishness. You say you have nothing against Gabriel. He's just so strait-laced and nerdy that it instinctively makes you want to annoy him a little, right? Nah, let's be honest: you still hold a grudge from when he ratted you out for running away and then for spray-painting a wall. How can someone be so annoying?

male

oc

fictional

Features

NSFW AI Chat with Top-Tier Models

Experience the most advanced NSFW AI chatbot technology with models like GPT-4, Claude, and Grok. Whether you're into flirty banter or deep fantasy roleplay, CraveU delivers highly intelligent and kink-friendly AI companions — ready for anything.

Real-Time AI Image Roleplay

Go beyond words with real-time AI image generation that brings your chats to life. Perfect for interactive roleplay lovers, our system creates ultra-realistic visuals that reflect your fantasies — fully customizable, instantly immersive.

Explore & Create Custom Roleplay Characters

Browse millions of AI characters — from popular anime and gaming icons to unique original characters (OCs) crafted by our global community. Want full control? Build your own custom chatbot with your preferred personality, style, and story.

Your Ideal AI Girlfriend or Boyfriend

Looking for a romantic AI companion? Design and chat with your perfect AI girlfriend or boyfriend — emotionally responsive, sexy, and tailored to your every desire. Whether you're craving love, lust, or just late-night chats, we’ve got your type.
