Megan AI Sex Tape: The Digital Abyss

The Unsettling Rise of Synthetic Intimacy

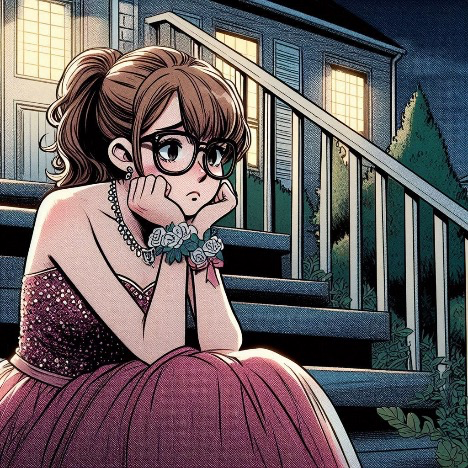

The digital landscape has entered an era in which the line between reality and fabrication blurs with alarming speed. At the forefront of this shift is the proliferation of AI-generated content, particularly deepfakes, which has profound implications for privacy, reputation, and consent. The phrase "megan ai sex tape," whether it refers to a specific, widely publicized incident or to a more general fear, captures a disturbing trend: the weaponization of advanced artificial intelligence to create highly realistic, non-consensual intimate imagery. This is not merely a technical marvel but a societal crisis, one that forces a reckoning with the ethical responsibilities that accompany groundbreaking technological capabilities.

Consider, for a moment, the emotional and psychological toll. Imagine waking up to find explicit content featuring your likeness, meticulously crafted by an algorithm, circulating across the internet. The content is entirely fake, yet unmistakably "you" in appearance. It becomes a digital phantom that haunts your life, jeopardizes your relationships, and threatens your professional standing. The victim's reality is hijacked in an instant, and their sense of self is violated in a way that traditional notions of libel or invasion of privacy scarcely prepare us for. The "megan ai sex tape" narrative, like any similar celebrity deepfake, is a stark, high-profile illustration of this vulnerability, but because these sophisticated tools are increasingly accessible, the vulnerability extends to anyone with an online presence.

This article examines the technology behind such creations, the ethical quagmire they present, the nascent legal battles being waged, and the broader societal implications of a world in which visual truth can no longer be assumed.
It's a journey into the digital abyss, where the power of AI can be perverted to inflict unprecedented harm, and where the urgent need for robust safeguards and widespread digital literacy has never been more critical. The narrative isn't just about a "megan ai sex tape" or any single incident; it's about the erosion of trust, the weaponization of imagery, and the collective challenge of navigating an increasingly synthetic reality.

The Alchemist's Forge: How AI Crafts Digital Illusions

To comprehend the menace embodied by a "megan ai sex tape," one must first grasp the technology that makes such fabrications possible. At its heart are advanced forms of machine learning, chiefly Generative Adversarial Networks (GANs) and, more recently, diffusion models. These are not clever filters; they are algorithmic alchemists, capable of turning raw data into convincing, entirely synthetic imagery.

GANs, introduced by Ian Goodfellow and his colleagues in 2014, represent a revolutionary approach to generative AI. A GAN consists of two neural networks locked in a perpetual game of cat and mouse:

* The Generator: This network creates new data instances that mimic the characteristics of a training dataset. In the context of deepfakes, it might generate images of faces, bodies, or specific expressions.
* The Discriminator: This network acts as a detective, trying to tell real examples drawn from the training set apart from fakes produced by the generator.

The two networks train simultaneously. The generator strives to produce fakes so convincing that the discriminator cannot tell them apart from real data, while the discriminator sharpens its ability to detect even the subtlest tells of synthetic origin. Through this adversarial process, the generator becomes adept at producing hyper-realistic outputs. For a face-swap deepfake, a generative model, GAN-based or otherwise, is trained on large amounts of real footage or images of the target, learning their facial expressions, movements, and subtle mannerisms. Once trained, it can map that learned "identity" onto existing video or images, producing seamless, lifelike fabrications.
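The adversarial game described above has a compact formal statement. The minimax objective below is the one given in Goodfellow and colleagues' 2014 paper, where $D(x)$ is the discriminator's estimated probability that $x$ is real, $G(z)$ is the generator's output for a random noise vector $z$, $p_{\text{data}}$ is the distribution of real training examples, and $p_z$ is the noise distribution:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator pushes $V$ up by scoring real samples near 1 and fakes near 0; the generator pushes $V$ down by producing samples that $D$ scores near 1. In practice the generator is often trained to maximize $\log D(G(z))$ instead, which gives stronger gradients early in training.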
While GANs have been central to deepfake technology for years, diffusion models have emerged as an even more potent force in image and video synthesis. Unlike GANs, which learn to generate data directly, diffusion models learn to reverse a process of gradually adding noise. Generation starts from pure noise, which the model progressively refines, removing noise and adding detail under the guidance of a text prompt or an input image, until a coherent and often strikingly realistic image emerges. For creating deepfakes, diffusion models offer several advantages:

* Higher Quality and Resolution: They often produce images and videos with greater fidelity, richer detail, and fewer artifacts than earlier GANs.
* Greater Control: Text-to-image diffusion models allow precise control over the generated content, making it easier to specify scenarios, expressions, and environments. An attacker can simply describe a fabricated scene in granular detail.
* Broader Applicability: Their versatility extends beyond face-swapping; they can generate entire scenes, alter existing imagery, and create novel body poses, which makes them powerful tools for fabricating almost any kind of visual content.

What makes these technologies particularly alarming is their growing accessibility. What was once the domain of well-funded research labs and specialized engineers is now largely open source and often available through user-friendly interfaces. Numerous online tools and downloadable applications simplify the deepfake creation process, democratizing a technology with immense potential for misuse. Creating a truly convincing, high-resolution deepfake, especially a video, still requires significant computational power and some technical skill, but the barrier to entry keeps falling. This ease of access multiplies the threat, extending it beyond high-profile targets to potentially anyone, anywhere.
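The noising process that diffusion models learn to reverse can be written down explicitly. In one common formulation, denoising diffusion probabilistic models (DDPM; other variants differ in detail), each of $T$ forward steps adds a small amount of Gaussian noise governed by a schedule $\beta_t$, and the noisy image at any step can be sampled directly from the clean image $x_0$:

$$
q(x_t \mid x_{t-1}) = \mathcal{N}\left(x_t;\ \sqrt{1-\beta_t}\,x_{t-1},\ \beta_t \mathbf{I}\right), \qquad
q(x_t \mid x_0) = \mathcal{N}\left(x_t;\ \sqrt{\bar\alpha_t}\,x_0,\ (1-\bar\alpha_t)\,\mathbf{I}\right), \qquad
\bar\alpha_t = \prod_{s=1}^{t}(1-\beta_s)
$$

A network $\epsilon_\theta$ is trained to predict the noise that was added at each step, and generation runs the chain backward from pure noise; a text prompt or input image enters as extra conditioning for $\epsilon_\theta$.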
As a result, a "megan ai sex tape" scenario is no longer the preserve of a few sophisticated actors; it could now be attempted by a much wider array of malicious individuals. Moreover, the underlying models are often trained on massive datasets assembled in part by scraping images and videos from social media platforms without explicit consent. This raises further ethical questions about data privacy and about the foundations on which these synthetic realities are built. The very data that defines our online presence can be weaponized against us, producing a digital doppelgänger that performs acts we never consented to, in scenarios we never experienced. The issue, therefore, is not just what AI can do; it is what it should not be allowed to do without significant ethical and legal oversight.

The "Megan" Phenomenon: A Case Study in Digital Violation

The notion of a "megan ai sex tape" serves as a potent, if distressing, proxy for the broader issue of non-consensual deepfake pornography. The existence and details of any particular "Megan" incident are often shrouded in rumor, and claims about them may themselves be fabricated. Even so, the idea of a celebrity named Megan being targeted with AI-generated intimate imagery highlights a pervasive and deeply damaging trend. Such cases are not isolated incidents but symptoms of a systemic problem in which advanced AI is used to violate individuals, predominantly women, on an unprecedented scale.

When a deepfake "sex tape" surfaces featuring a public figure, whether an actress such as Megan Fox, a musician such as Megan Thee Stallion, or a generic "Megan" standing in for any woman, the consequences are immediate and severe:

* Reputational Assassination: For a public figure, their image is their livelihood. Deepfake pornography can undo years of careful brand building, costing endorsements, roles, and public trust. The smear becomes a lasting stain that is extremely difficult to erase from the collective consciousness, even after the content has been shown to be fake.
* Profound Psychological Trauma: Beyond the professional fallout, the personal toll is immense. Victims report feelings of betrayal, humiliation, anxiety, depression, and even suicidal ideation. Many describe the experience in terms comparable to sexual assault, because their sense of bodily autonomy and privacy has been fundamentally violated. The constant fear that the content will resurface, or be seen by loved ones, creates a persistent state of distress.
* Erosion of Autonomy: The victim loses control over their own digital likeness. Their face and body become a canvas for someone else's malicious intent, manipulated without their consent or knowledge. This loss of autonomy is a core component of the violation.

One of the most insidious aspects of deepfake "sex tapes" is their capacity for viral dissemination. Once created, these synthetic videos and images can spread across social media platforms, private messaging apps, and underground forums at remarkable speed.

* Platform Challenges: Despite major platforms' takedown policies, the sheer volume of content and the sophistication of the fakes make detection and removal a constant uphill battle. Removed content can quickly resurface, often in slightly altered forms, making complete eradication nearly impossible.
* Anonymity and Impunity: The relative anonymity of certain online spaces emboldens perpetrators and makes it difficult to trace the original source and hold individuals accountable. The resulting perception of impunity further fuels the creation and distribution of such content.
* The "Streisand Effect": Attempts to remove or suppress the content can inadvertently draw more attention to it, leading to wider dissemination. This paradox leaves victims caught between the desire for justice and the risk of further exposure.

While public figures like "Megan" make headlines when targeted, the vast majority of deepfake pornography victims are everyday individuals, most often women. Schoolteachers, students, ex-partners, and private citizens have all been targeted, with devastating consequences for their personal and professional lives. The "megan ai sex tape" discussion is therefore a high-profile warning sign of a much broader societal issue. Because deepfake tools are so accessible, anyone, regardless of public profile, can become a target, which makes this a pervasive threat that demands comprehensive solutions.

Ethical and Societal Ramifications: The Fabric of Trust Unravels

The proliferation of AI-generated non-consensual intimate imagery, exemplified by phenomena like the "megan ai sex tape," tears at the fabric of societal trust and poses profound ethical dilemmas. This is not merely about individual harm; it is about the erosion of a shared, verifiable reality, the exacerbation of gender-based violence, and a fundamental challenge to our collective understanding of truth in the digital age.

Deepfake pornography is a particularly virulent strain of non-consensual intimate imagery (NCII), the category that includes what is often called "revenge porn." Traditional NCII involves sharing genuine private images without consent; deepfakes go a step further by creating non-consensual content that never existed. This distinction matters because pointing out that the content is fabricated offers victims little protection. The images can be so convincing that the burden of proof effectively shifts, forcing victims to demonstrate that the content is fake.

* Gendered Violence: Overwhelmingly, the targets of deepfake pornography are women. This pattern reveals the misogynistic undercurrents of the technology's misuse, reinforcing existing power imbalances and contributing to the digital harassment and sexual objectification of women. Creating a "megan ai sex tape" is not just an act of technological abuse; it is an act of gendered violence, designed to humiliate, control, and silence.
* Psychological Torture: As discussed above, the psychological impact on victims is severe and long-lasting. It is a form of digital torture that infiltrates the victim's private life, shatters their sense of security, and often leads to social ostracization and professional ruin.

Perhaps the most insidious long-term effect of advanced synthetic media is the erosion of trust in visual evidence. If any video or image can be convincingly faked, how do we tell truth from deception?
This phenomenon, often described as "truth decay," has consequences far beyond intimate imagery:

* Disinformation and Propaganda: The same technology used to create a "megan ai sex tape" can generate fake political speeches, manipulate news footage, or fabricate evidence, fueling disinformation campaigns that undermine democratic processes and incite social unrest.
* Judicial Challenges: The legal system relies heavily on visual evidence. If deepfakes become indistinguishable from reality, how will courts verify the authenticity of video testimony, surveillance footage, or photographs? This poses a fundamental challenge to the administration of justice.
* Skepticism and Paranoia: In a world where anything can be faked, pervasive skepticism and paranoia can set in. People may dismiss genuine visual reports as fakes, leading to a general distrust of media and information sources.

The "megan ai sex tape" problem also forces a critical re-evaluation of consent in the digital age. Traditionally, consent pertained primarily to the physical realm or to the sharing of existing content. Now the very creation of one's likeness in a compromising situation, without permission, calls for a new framework for digital consent.

* Implicit Consent vs. Explicit Consent: Does posting photos online imply consent for AI models to use your likeness as training data, which could then be used to create deepfakes? This is contested territory. Ethical AI development demands explicit consent, but the reality of vast public datasets often contradicts this.
* The Right to Control One's Digital Likeness: The "right to privacy" and the "right to one's image" are being severely tested. As AI makes it trivial to generate new content featuring an individual, robust legal protection for one's digital likeness becomes paramount.

In essence, the ethical and societal ramifications of phenomena like the "megan ai sex tape" extend far beyond the immediate harm to individuals. They challenge our collective ability to distinguish reality from fabrication, exacerbate existing forms of online abuse, and demand a fundamental rethinking of how we regulate and interact with advanced AI technologies to preserve trust, protect privacy, and uphold basic human dignity.

The Legal and Regulatory Labyrinth: Chasing a Shifting Shadow

The rapid advancement and widespread misuse of deepfake technology, particularly to create non-consensual intimate imagery like a "megan ai sex tape," have plunged legal systems worldwide into a challenging labyrinth. Legislators and courts are struggling to keep pace with a technology that evolves at breakneck speed, often leaving victims with insufficient recourse. The current legal landscape is a patchwork of existing laws, nascent deepfake-specific legislation, and significant jurisdictional hurdles.

In the absence of comprehensive deepfake-specific laws, victims and prosecutors have relied on a mosaic of existing legal provisions, with varying degrees of success:

* Revenge Porn Laws (Non-Consensual Intimate Imagery, NCII): Many jurisdictions prohibit the non-consensual sharing of intimate images. Although these laws were designed for genuine content, some have been interpreted broadly enough to cover deepfakes, particularly when they are distributed with malicious intent. The difficulty is that deepfakes are fabricated, not merely shared.
* Defamation and Libel: Deepfakes that damage a person's reputation may fall under defamation law. In the United States, however, public figures must prove actual malice (knowledge of falsity or reckless disregard for the truth), and any suit first requires identifying who created or spread the content.
* Copyright Infringement: If an attacker uses copyrighted material (e.g., an actor's performance from a film) to create a deepfake, copyright law may offer a limited avenue for redress, though it is rarely the primary legal strategy against deepfake pornography.
* Right to Publicity/Personality Rights: In some jurisdictions, individuals have a right to control the commercial use of their name, image, and likeness. This could apply to deepfakes used for commercial gain or to exploit a celebrity's image (for example, a hypothetical "megan ai sex tape" used in advertising or to monetize views).
* Fraud: If a deepfake is used to deceive someone for financial gain or to commit identity theft, existing fraud statutes may apply.

The main limitation of these laws is that they were not designed with AI-generated synthetic media in mind. They often require proof of "real" content, or of a specific intent, that does not align neatly with how deepfakes are created and disseminated.

Recognizing these gaps, governments worldwide have begun enacting legislation that specifically targets deepfakes and AI-generated synthetic media:

* United States: Several states have made it illegal to create or disseminate deepfake pornography without consent, often with severe penalties. California (AB 602), Texas, and Virginia, for example, have enacted such laws. Federal efforts are also underway, but progress has been slow. The focus is usually on non-consensual intimate imagery and electoral interference.
* European Union (EU AI Act): The EU AI Act, which entered into force in August 2024 with obligations phased in over the following years, is a landmark law that classifies AI systems by risk level. Although it is not focused solely on deepfakes, it imposes transparency obligations requiring that deepfakes and certain other AI-generated content be disclosed as artificially generated or manipulated, which makes deceptive use harder.
* United Kingdom (Online Safety Act): The UK's Online Safety Act 2023, introduced as the Online Safety Bill, places duties on tech companies to remove illegal content, including deepfake pornography, and makes sharing deepfake intimate images without consent a criminal offense.
* Australia: Australia has introduced laws to combat the misuse of AI-generated intimate images, including the Criminal Code Amendment (Deepfake Sexual Material) Act 2024.

These emerging laws are a crucial step forward, but they face several challenges:

* Definition: Clearly defining "deepfake" and distinguishing malicious synthetic media from legitimate artistic or satirical uses is complex.
* Scope: Should laws target only non-consensual intimate deepfakes, or also those used for political disinformation or fraud?
* Intent: Proving malicious intent can be difficult, especially when content is shared widely by third parties.
* Jurisdiction: The internet has no borders. A deepfake created in one country can be hosted in another and accessed globally, making enforcement extremely difficult. Holding platform providers accountable, rather than only the creators, is becoming a key strategy.

Even with new laws, effective enforcement remains a significant hurdle:

* Traceability: The anonymity of many online platforms and the speed of dissemination make it extremely difficult to trace the original creator of a deepfake "megan ai sex tape" or similar content.
* Resources: Law enforcement agencies often lack the technical expertise and resources to investigate and prosecute deepfake cases effectively.
* Victim Support: Victims often suffer secondary victimization through the legal process, and there is a critical need for integrated legal, psychological, and technical support.

The legal and regulatory response to deepfakes is a dynamic, evolving field. Progress is being made, but the challenge of reining in this technology when it is used for harm is immense. It requires not just new laws but international cooperation, technological solutions, and a fundamental shift in how online platforms handle harmful content.

The Front Lines of Defense: Combating AI-Generated NCII

The fight against AI-generated non-consensual intimate imagery (NCII), exemplified by concerns over a "megan ai sex tape" or similar fabrications, is a multi-faceted battle fought on several fronts. It requires coordinated technological innovation, robust platform policies, proactive advocacy, and widespread public education. There is no single magic bullet, only a layered defense designed to deter creators, detect content, support victims, and raise awareness.

Just as AI is used to create deepfakes, it is also being used to combat them. This "AI vs. AI" arms race is critical for detection and verification.

* Deepfake Detection Tools: Researchers are developing AI algorithms that identify the tell-tale signs of synthetic media. These tools look for subtle inconsistencies in facial expressions, skin texture, lighting, and physiological signals (such as unnatural blinking or blood-flow patterns), as well as digital artifacts left by generative models. Although increasingly effective, they are in a constant race against ever-improving generation techniques.
* Digital Watermarking and Provenance: A promising long-term approach is to embed invisible or visible digital watermarks, or signed provenance records, into legitimate media at the point of creation so that authenticity can be verified later. The Coalition for Content Provenance and Authenticity (C2PA) is developing open technical standards for content provenance that let consumers and platforms trace the origin and modification history of digital media. This could help distinguish a genuine video of "Megan" from an AI-generated fake.
* Source Verification: Tools that analyze the metadata and origin information of digital content can help determine whether an image or video has been altered or fabricated.

The challenge, however, is that detection tools are reactive: they respond to fakes that already exist.
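The provenance idea described above, binding a cryptographic fingerprint of the media to a signed claim at the point of creation, can be illustrated in a few lines of Python. This is a minimal sketch under stated assumptions, not the C2PA format: the manifest layout, `make_manifest`, `verify`, and the shared `SIGNING_KEY` are hypothetical, and an HMAC stands in for the certificate-based digital signatures that real provenance systems use.

```python
import hashlib
import hmac
import json

# Hypothetical key held by the capture device or publisher.
# Real provenance systems such as C2PA use public-key certificates instead.
SIGNING_KEY = b"hypothetical-device-key"


def make_manifest(media: bytes, creator: str) -> dict:
    """Bind a SHA-256 fingerprint of the media and a creator claim under one signature."""
    claim = {"creator": creator, "sha256": hashlib.sha256(media).hexdigest()}
    payload = json.dumps(claim, sort_keys=True).encode()
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify(media: bytes, manifest: dict) -> bool:
    """True only if the claim is untampered and its hash matches these exact bytes."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode()
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # the claim itself was altered after signing
    return manifest["claim"]["sha256"] == hashlib.sha256(media).hexdigest()


# Usage: sign at capture time, check later.
original = b"raw bytes of a captured video frame"
manifest = make_manifest(original, creator="newsroom-camera-07")
print(verify(original, manifest))            # True
print(verify(original + b"\x00", manifest))  # False: the bytes changed
```

Because the hash covers the exact bytes, any change, including an innocent re-encode, breaks verification; C2PA is designed so that editing tools record their changes in new signed manifests that reference the earlier ones. A missing or failed manifest only means authenticity cannot be confirmed; it does not by itself prove the content is fake.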
The ideal is to prevent creation and dissemination in the first place. Social media platforms, content hosts, and messaging services are crucial to stemming the tide of deepfake NCII. They are the primary vectors for dissemination, so their policies and enforcement mechanisms are vital.

* Robust Takedown Policies: Platforms must have clear, transparent, and rapidly executable policies for removing non-consensual intimate imagery, including deepfakes. This requires dedicated teams and streamlined reporting mechanisms.
* Proactive Detection and Content Moderation: Moving beyond reactive takedowns, platforms are investing in AI-powered tools that detect and flag deepfake NCII before it goes viral. These include image-hashing databases and machine learning models trained to identify synthetic content.
* Collaboration with Law Enforcement: Platforms should cooperate with law enforcement investigations, providing user data (where legally appropriate) to help identify perpetrators.
* Transparency and Reporting: Clear pathways for users to report harmful content, together with transparency about the actions taken, build trust and empower users.
* User Education: Platforms can help educate users about deepfakes, media literacy, and the dangers of sharing unverified content.

For victims of deepfake NCII, support services are critical, and advocacy groups and non-profits play a vital role:

* Legal Aid and Guidance: Organizations like the Cyber Civil Rights Initiative (CCRI) provide legal resources and support to victims, helping them understand their rights and pursue legal action.
* Psychological Support: The trauma of being a deepfake victim calls for specialized psychological counseling. Support networks help victims cope with the emotional fallout.
* Digital Forensics and Evidence Collection: Helping victims document the deepfake content and its spread, and collect evidence, is crucial for potential legal proceedings.
* Lobbying for Stronger Laws: Advocacy groups are at the forefront of lobbying governments for more comprehensive and effective legislation against deepfakes and NCII.

Ultimately, one of the most powerful defenses against deepfakes is an informed and discerning public.

* Educational Campaigns: Raising public awareness of deepfake technology, its capabilities, and its dangers is paramount. This includes explaining how a "megan ai sex tape" could be created and the malicious intent behind it.
* Media Literacy Programs: Teaching critical thinking, how to evaluate online information, and how to spot potential deepfakes is essential for navigating an increasingly complex digital landscape. These skills should be part of school curricula from a young age.
* Promoting Digital Empathy: Fostering a culture in which people understand the severe harm caused by sharing non-consensual content, whether real or fake, is vital.

The battle against AI-generated NCII is ongoing. It is a complex interplay of technological innovation, legislative action, corporate responsibility, and individual awareness. The challenges are immense, but collective efforts across these fronts offer the best hope for safeguarding individuals and preserving the integrity of our digital reality.
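The image-hashing databases mentioned among the platform measures work by matching new uploads against fingerprints of images already reported as abusive, so that platforms can compare and share fingerprints rather than the images themselves. The sketch below uses a simple "average hash" as an illustrative stand-in; production systems rely on more robust perceptual hashes such as Microsoft's PhotoDNA or Meta's open-source PDQ. It assumes images arrive as grayscale pixel grids (lists of lists), and the function names and the 5-bit match threshold are hypothetical choices.

```python
from typing import List, Set

Grid = List[List[float]]  # grayscale pixel values, 0 (black) to 255 (white)


def downscale(pixels: Grid, size: int = 8) -> Grid:
    """Shrink an image to size x size cells by averaging equal blocks.

    Assumes height and width are multiples of `size` (a simplification).
    """
    bh, bw = len(pixels) // size, len(pixels[0]) // size
    cells = []
    for by in range(size):
        row = []
        for bx in range(size):
            block = [pixels[y][x]
                     for y in range(by * bh, (by + 1) * bh)
                     for x in range(bx * bw, (bx + 1) * bw)]
            row.append(sum(block) / len(block))
        cells.append(row)
    return cells


def average_hash(pixels: Grid, size: int = 8) -> int:
    """Pack one bit per cell (1 = brighter than the image mean) into an int."""
    flat = [v for row in downscale(pixels, size) for v in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for v in flat:
        bits = (bits << 1) | int(v > mean)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


def matches_known(pixels: Grid, known_hashes: Set[int], threshold: int = 5) -> bool:
    """True if the image's hash is within `threshold` bits of any reported hash."""
    h = average_hash(pixels)
    return any(hamming(h, k) <= threshold for k in known_hashes)


# Usage: register a reported image, then screen re-uploads.
reported = [[16 * x + y for x in range(16)] for y in range(16)]  # synthetic gradient
known = {average_hash(reported)}
brighter = [[min(255, v + 10) for v in row] for row in reported]
inverted = [[255 - v for v in row] for row in reported]
print(matches_known(brighter, known))  # True: near-duplicate
print(matches_known(inverted, known))  # False: every bit differs
```

A near-duplicate (here, the same image made slightly brighter) lands within a few bits of the stored hash, while different content does not. Simple average hashes are far less robust than the production algorithms named above, which is why real deployments use them.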

The Future of Synthetic Media: A Double-Edged Sword

The discussion around phenomena like a "megan ai sex tape" inevitably leads to a broader consideration of the future of synthetic media. Generative AI is undeniably a double-edged sword. Its malicious use for non-consensual intimate imagery is abhorrent and demands stringent controls, yet the underlying technology holds immense promise for positive, transformative applications across many sectors. It is crucial to acknowledge that potential when the technology is used ethically and responsibly:

* Entertainment Industry: AI is transforming film production, animation, and special effects. It can de-age actors, create realistic digital doubles, synthesize voices for dubbing, and even generate entire virtual worlds, allowing unprecedented creative freedom and efficiency. Think of seamlessly integrating a deceased actor into a new film, or crafting an entirely new fantastical creature for a blockbuster.
* Art and Design: AI tools are helping artists and designers create novel works, explore new aesthetics, and accelerate their creative processes. From generating fashion designs to composing music and creating intricate digital art, AI can be a powerful creative collaborator.
* Education and Training: Synthetic media can power highly realistic training simulations, from surgery to flight training, and can generate engaging, personalized educational content that makes learning more immersive and accessible.
* Virtual Reality and Gaming: AI-generated environments, characters, and narratives are enhancing the realism and interactivity of virtual reality and video games, creating more immersive worlds to explore.
* Accessibility: Synthetic voices and avatars can improve accessibility for people with disabilities by providing personalized communication tools.

These positive applications underscore that the technology itself is neutral; its impact is determined by human intent and by the ethical frameworks guiding its development and deployment. The challenge lies in walking the tightrope between fostering innovation and preventing abuse. The ease with which deepfakes like a "megan ai sex tape" can be created points to a fundamental flaw in the current approach to AI development and governance.

* Responsible AI Development: There is a growing call for developers to embed ethical considerations into every stage of the AI lifecycle, from data collection and model training to deployment. This includes thorough risk assessments, built-in safeguards against misuse, and privacy-preserving techniques.
* "Guardrails" and "Red Teaming": AI models can be built with guardrails that prevent them from generating harmful content, such as explicit imagery or hate speech. Red teaming, deliberately trying to break or misuse an AI system, is also crucial for finding vulnerabilities before widespread deployment.
* Policy and Regulation: As discussed above, robust legal frameworks are essential to deter malicious actors and give victims recourse. This includes mandating transparency (e.g., labeling AI-generated content), establishing clear accountability for misuse, and ensuring international cooperation.
* Public Discourse and Education: Continuous public discussion of AI's ethical implications and ongoing efforts to strengthen media literacy are vital. An informed public is better equipped to identify and resist harmful synthetic media.

The ultimate societal challenge posed by synthetic media is the ongoing need to distinguish reality from fabrication. As AI models grow more sophisticated, detection will become harder, and the burden of verification may shift.
* Digital Provenance and Trust Indicators: The future will likely bring greater emphasis on digital provenance systems (like C2PA) and widely recognized trust indicators that verify the authenticity of digital content at its source.
* Human Oversight: Despite advances in AI, human oversight and critical thinking will remain indispensable. Journalists, researchers, and engaged citizens will need new skills to verify information and challenge potentially manipulated content.
* The Psychological Impact: Society will have to grapple with living in a world where visual evidence can no longer be blindly trusted. This could foster a more skeptical, yet potentially more discerning, population.

The future of synthetic media is not a predetermined path but one we are shaping collectively. The shadows cast by malicious applications like the "megan ai sex tape" are dark, but the potential for positive, ethical innovation is immense. The decisive factor will be our commitment to responsible development, proactive governance, and collective vigilance in upholding truth and protecting individuals in an increasingly synthetic digital world. It is an ongoing journey of adaptation, education, and unwavering ethical resolve.

A Hypothetical Reflection: The Echo in the Digital Hall

Consider a conversation from a few decades ago, around the dawn of the internet. If you had described a world in which anyone, with minimal effort, could create and instantly disseminate a hyper-realistic, sexually explicit video of a person they had never met, purely through software, the listener might have dismissed it as science fiction, a dystopian nightmare confined to novels. Yet here we are, facing the very real implications of a "megan ai sex tape" and similar digital fabrications.

It is akin to Pandora's Box, opened not by myth but by the relentless march of technological progress. Once the contents are out, they cannot simply be put back. The echoes of this digital violation now reverberate through our societal halls, demanding attention and action. It is a chilling realization that the very tools designed to push the boundaries of creativity and efficiency can be perverted to inflict such profound harm. The concept of consent has been redefined, extending beyond physical boundaries to the very essence of our digital likeness. This is not just about a famous face; it is about the fundamental right to control one's own image and identity in a world where pixels can feel as real as flesh and blood.

The challenge spans generations. Older generations, who may trust visual media implicitly, are particularly vulnerable to manipulation. Younger, digitally native generations may be better at spotting crude fakes, but they are immersed in a culture where sharing and virality often trump verification, and even their discernment is tested by the sophistication of modern AI.
This creates a cognitive dissonance, a digital trust deficit that is eroding our shared understanding of reality. The hypothetical "megan ai sex tape" isn't just an item of sensationalism; it's a profound ethical bellwether. It signals a shift in the nature of harm, moving from traditional forms of slander to a visceral, visual assault that can be devastating. It reminds us that technology, while offering incredible promise, demands an equally incredible level of responsibility from its creators, its users, and the societies it reshapes. The digital wild west of unbridled innovation without ethical consideration is no longer sustainable. We must collectively build the digital equivalent of guardrails, not just for the sake of abstract ethical principles, but for the very real human beings whose lives are being irrevocably damaged by these synthetic shadows. The echo in the digital hall is a call to action, a reminder that the future we build with AI must be one grounded in human values, respect, and enduring truth.

Conclusion: Reclaiming Truth and Trust in the AI Age

The advent of highly sophisticated generative AI has ushered in an era of unprecedented digital possibilities, yet it has simultaneously unleashed profound ethical and societal challenges. The "megan ai sex tape" phenomenon, whether a specific incident or a generalized concern, stands as a stark and distressing example of how powerful technological advances can be perverted to inflict severe, non-consensual harm. AI-generated non-consensual intimate imagery (NCII) is not merely a technical matter; it is a direct assault on individual privacy, a potent form of gendered violence, and a fundamental threat to the very notion of objective truth in our digital world.

We have explored the workings of AI models such as generative adversarial networks (GANs) and diffusion models that make such convincing fabrications possible, and highlighted their alarming accessibility. We have examined the devastating psychological and reputational toll on victims, underscoring that the "Megan" phenomenon is not an isolated celebrity mishap but a high-profile symptom of a widespread vulnerability affecting countless ordinary individuals. The legal and regulatory landscape, still scrambling to catch up, remains a complex and often insufficient patchwork of laws that struggles with jurisdiction, definition, and enforcement in a borderless digital realm.

However, the future is not predetermined. The fight against AI-generated NCII is being waged on multiple fronts. Technological countermeasures, from detection tools to digital watermarking initiatives, are evolving to fight fire with fire. Platforms are increasingly being held accountable and urged to implement effective takedown policies and proactive moderation. Crucially, advocacy groups and support networks provide vital lifelines for victims, offering legal aid and psychological support.

Ultimately, widespread public awareness and media literacy remain our strongest defense, empowering individuals to critically assess digital content and cultivating a culture of digital empathy and responsibility. The "megan ai sex tape" narrative is a potent reminder that while AI holds immense promise across countless domains, its development and deployment must be inextricably linked with stringent ethical safeguards and sound governance. As we navigate this increasingly synthetic landscape, the collective imperative is clear: we must reclaim truth and rebuild trust. That demands continuous innovation in detection and prevention, comprehensive legislative action, genuine corporate responsibility, and a fundamental shift in how society interacts with and perceives digital media. Only through a concerted, multi-stakeholder effort can we harness the transformative power of AI for good while safeguarding individual dignity and the integrity of our shared reality.
