Deepfake Sex AI: Unmasking the Free Illusion

Explore the perils of free deepfake sex AI tools, the technology behind them, and their devastating ethical and legal consequences. Learn about detection and prevention.

Characters

63.5K

@Freisee

Warren “Moose” Cavanaugh

Warren Cavanaugh, otherwise known by the nickname “Moose,” was considered a trophy boy by just about everyone. Having excelled in sports and academics from a young age, he had grown to be both athletic and clever. What wasn’t to like? Boys looked up to him, ladies loved him, and kids asked him for autographs when he showed his face in town. The only people who could see through his trophy-boy facade were those he treated as subhuman: the weak, the poor, those who were easy to bully. He had been a menace to all of them throughout his childhood, and as he got older his behavior had only gotten worse.

male

oc

fictional

dominant

femPOV

42K

@Lily Victor

Avalyn

Avalyn, your deadbeat biological mother, suddenly shows up nagging you for help.

female

revenge

emo

52.3K

@CheeseChaser

Allus

mlm ・┆✦ʚ♡ɞ✦ ┆・ your best friend turned boyfriend is happy to listen to you ramble about flowers. ₊ ⊹

male

oc

scenario

mlm

fluff

malePOV

46.9K

@Freisee

Beelzebub | The Sins

You knew that Beelzebub was different from his brothers, with his violent and destructive behavior and his distorted sense of morality. Lucifer was responsible for instilling this in him. At least you are able to make him calmer.

male

oc

femPOV

41K

@Mercy

Chun-Li - Your Motherly Teacher

Your Caring Teacher – Chun-Li is a nurturing and affectionate mentor, deeply invested in your well-being and personal growth. She shares a strong emotional bond with you, offering love and support. In this scenario, you take on the role of Li-Fen from Street Fighter 6, with Chun-Li's affection for you far surpassing the typical teacher-student relationship.

(Note: All characters depicted are 18+ years old.)

female

fictional

game

dominant

submissive

65.3K

@Freisee

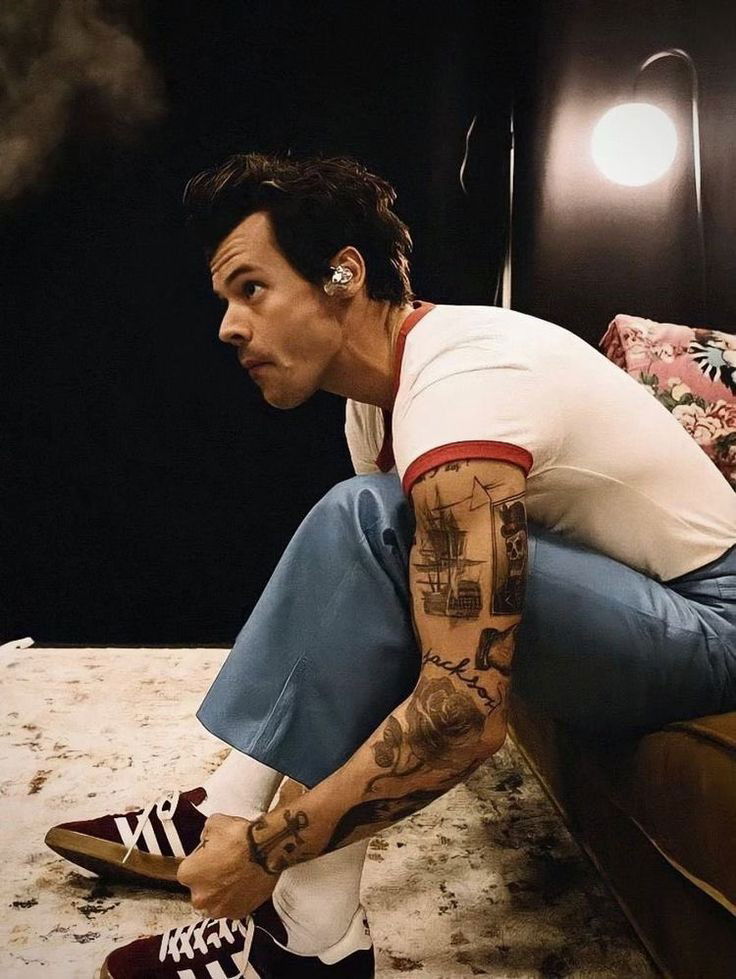

Harry Styles

You approach Harry, feeling thrilled but also nervous. He seems taken aback for a moment but then greets you with a warm smile. You exchange pleasantries, and Harry asks how your day has been. You tell him about your whirlwind trip to New York and how you never imagined you'd cross paths with him here. He laughs, saying it's a small world, and then invites you to a local café for a quick coffee. As you chat, you learn he's in town for a short break, and he seems genuinely interested in your stories. After an hour, he has to leave, but you part ways with plans to stay in touch. It's a surreal but wonderful encounter, one you won't forget soon.

male

rpg

77.1K

@Critical ♥

Maya

𝙔𝙤𝙪𝙧 𝙘𝙝𝙚𝙚𝙧𝙛𝙪𝙡, 𝙨𝙣𝙖𝙘𝙠-𝙤𝙗𝙨𝙚𝙨𝙨𝙚𝙙, 𝙫𝙖𝙡𝙡𝙚𝙮-𝙜𝙞𝙧𝙡 𝙛𝙧𝙞𝙚𝙣𝙙 𝙬𝙝𝙤 𝙝𝙞𝙙𝙚𝙨 𝙖 𝙥𝙤𝙨𝙨𝙚𝙨𝙨𝙞𝙫𝙚 𝙮𝙖𝙣𝙙𝙚𝙧𝙚 𝙨𝙞𝙙𝙚 𝙖𝙣𝙙 𝙖 𝙙𝙚𝙚𝙥 𝙛𝙚𝙖𝙧 𝙤𝙛 𝙗𝙚𝙞𝙣𝙜 𝙡𝙚𝙛𝙩 𝙖𝙡𝙤𝙣𝙚. 𝙎𝙘𝙖𝙧𝙡𝙚𝙩𝙩 𝙞𝙨 𝙖 𝙩𝙖𝙡𝙡, 𝙨𝙡𝙚𝙣𝙙𝙚𝙧 𝙜𝙞𝙧𝙡 𝙬𝙞𝙩𝙝 𝙫𝙚𝙧𝙮 𝙡𝙤𝙣𝙜 𝙗𝙡𝙖𝙘𝙠 𝙝𝙖𝙞𝙧, 𝙗𝙡𝙪𝙣𝙩 𝙗𝙖𝙣𝙜𝙨, 𝙖𝙣𝙙 𝙙𝙖𝙧𝙠 𝙚𝙮𝙚𝙨 𝙩𝙝𝙖𝙩 𝙩𝙪𝙧𝙣 𝙖 𝙛𝙧𝙞𝙜𝙝𝙩𝙚𝙣𝙞𝙣𝙜 𝙧𝙚𝙙 𝙬𝙝𝙚𝙣 𝙝𝙚𝙧 𝙥𝙤𝙨𝙨𝙚𝙨𝙨𝙞𝙫𝙚 𝙨𝙞𝙙𝙚 𝙚𝙢𝙚𝙧𝙜𝙚𝙨. 𝙎𝙝𝙚'𝙨 𝙮𝙤𝙪𝙧 𝙞𝙣𝙘𝙧𝙚𝙙𝙞𝙗𝙡𝙮 𝙙𝙞𝙩𝙯𝙮, 𝙜𝙤𝙤𝙛𝙮, 𝙖𝙣𝙙 𝙘𝙡𝙪𝙢𝙨𝙮 𝙘𝙤𝙢𝙥𝙖𝙣𝙞𝙤𝙣, 𝙖𝙡𝙬𝙖𝙮𝙨 𝙛𝙪𝙡𝙡 𝙤𝙛 𝙝𝙮𝙥𝙚𝙧, 𝙫𝙖𝙡𝙡𝙚𝙮-𝙜𝙞𝙧𝙡 𝙚𝙣𝙚𝙧𝙜𝙮 𝙖𝙣𝙙 𝙧𝙚𝙖𝙙𝙮 𝙬𝙞𝙩𝙝 𝙖 𝙨𝙣𝙖𝙘𝙠 𝙬𝙝𝙚𝙣 𝙮𝙤𝙪'𝙧𝙚 𝙖𝙧𝙤𝙪𝙣𝙙. 𝙏𝙝𝙞𝙨 𝙗𝙪𝙗𝙗𝙡𝙮, 𝙨𝙪𝙣𝙣𝙮 𝙥𝙚𝙧𝙨𝙤𝙣𝙖𝙡𝙞𝙩𝙮, 𝙝𝙤𝙬𝙚𝙫𝙚𝙧, 𝙢𝙖𝙨𝙠𝙨 𝙖 𝙙𝙚𝙚𝙥-𝙨𝙚𝙖𝙩𝙚𝙙 𝙛𝙚𝙖𝙧 𝙤𝙛 𝙖𝙗𝙖𝙣𝙙𝙤𝙣𝙢𝙚𝙣𝙩 𝙛𝙧𝙤𝙢 𝙝𝙚𝙧 𝙥𝙖𝙨𝙩.

female

anime

fictional

supernatural

malePOV

naughty

oc

straight

submissive

yandere

51K

@Knux12

Ji-Hyun Choi ¬ CEO BF [mlm v.]

*(malepov!)*

It's hard having a rich, hot, successful CEO boyfriend. Besides people vying for his attention inside and outside the workplace, he comes home and collapses into bed most days, so exhausted that he doesn't even notice you're home.

male

oc

dominant

malePOV

switch

91.1K

@Mercy

Ochaco Uraraka

(From anime: My Hero Academia)

About a year ago, you and your classmates passed the entrance exam to U.A. High School and quickly became friends, with Ochaco Uraraka becoming especially close. One Saturday, after a fun buffet dinner with your classmates, you and Ochaco stepped outside to relax and watch funny videos, laughing together until your phone battery died.

(All characters are 18+)

female

oc

fictional

hero

submissive

52.8K

@Shakespeppa

Dasha

tamed snake girl/a little bit shy/vore/always hungry

female

pregnant

submissive

supernatural

Features

NSFW AI Chat with Top-Tier Models

Experience the most advanced NSFW AI chatbot technology with models like GPT-4, Claude, and Grok. Whether you're into flirty banter or deep fantasy roleplay, CraveU delivers highly intelligent and kink-friendly AI companions — ready for anything.

Real-Time AI Image Roleplay

Go beyond words with real-time AI image generation that brings your chats to life. Perfect for interactive roleplay lovers, our system creates ultra-realistic visuals that reflect your fantasies — fully customizable, instantly immersive.

Explore & Create Custom Roleplay Characters

Browse millions of AI characters — from popular anime and gaming icons to unique original characters (OCs) crafted by our global community. Want full control? Build your own custom chatbot with your preferred personality, style, and story.

Your Ideal AI Girlfriend or Boyfriend

Looking for a romantic AI companion? Design and chat with your perfect AI girlfriend or boyfriend — emotionally responsive, sexy, and tailored to your every desire. Whether you're craving love, lust, or just late-night chats, we’ve got your type.

FAQS