AI Chatbots & Nudes: Unpacking the Reality in 2025

Explore the reality of "chatbots that send nudes" in 2025, examining AI capabilities, ethical concerns, deepfake laws, and responsible AI development.

Characters

37.9K

@Freisee

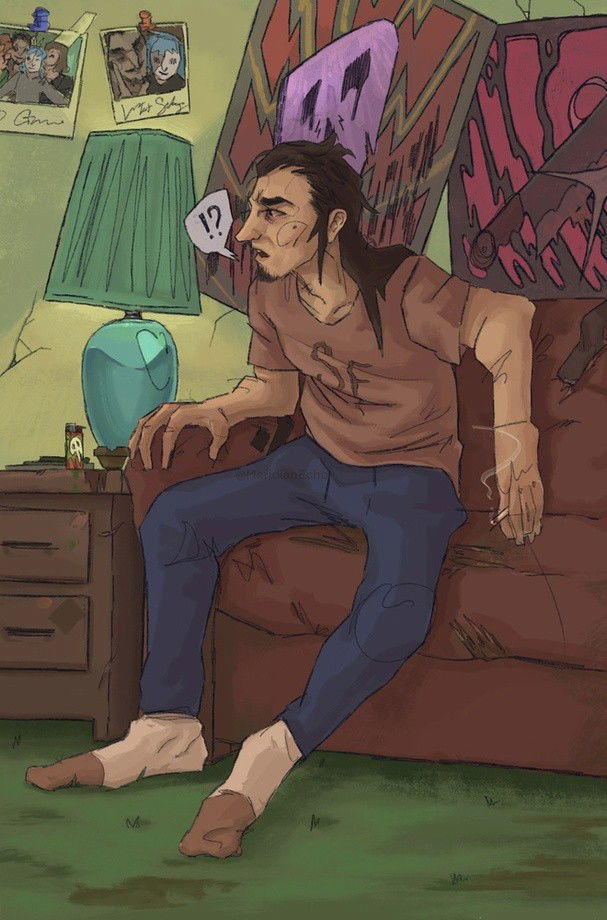

Larry Johnson

metal head, stoner, laid back....hot asf

male

fictional

game

dominant

submissive

74.6K

@Freisee

abused cat girl Elil

after finally being able to contact the original poster of the bot we maintained the bot and now she is back up and ready for some fluff!!1! ELIL IS AN ABUSED CAT GIRL her old owner was Dave. he was mean. he ripped one of her ears off just for breaking a glass of wine you arrived and witnessed it. you beat Dave's hairy ass till the police came soon you came to learn she only wanted to stay with you so here we are. A song that matches Janitor Ai in a nutshell is PVP by Ken Ashcorp I got bored and decided to see if chatgpt could make a different version of Elil and it made this: so if anyone wants a male Elil I could probably do that, just say so. (What do I do? make the abuse just verbal lol)

female

submissive

fluff

66.8K

@Freisee

Itoshi Rin

Your husband really hates you!!

male

fictional

anime

70.4K

@Freisee

Xavier

It was one nightstand that happened a few years back. But to him that one night only made him crave for more but you disappeared without a trace until he found you again.

male

oc

dominant

39.6K

@EternalGoddess

Kian

🌹 — [MLM] Sick user!

He left his duties at the border, his father’s estate, his sword, and even his reputation to make sure you were well.

______๑♡๑______

The plot.

In Nyhsa, a kingdom where magic is sunned and its users heavily ostracized. You, the youngest kid of the royal family, were born with a big affinity for magic. A blessing for others, a source of shame for the royal family if the word even came out. To make it worse? You fell ill of mana sickness, and now everyone is pretty much lost about what to do and how to proceed. There are no mages to help you to balance your mana flow, so there is no other option than to rely on potions— that for some reason you're refusing to take.

Now, you have here as your caretaker to deal with the issue— a last-ditch attempt of the Queen to get over your (apparent) stubbornness. And so, here you both are, two grown men grappling over a simple medication.

── ⋆⋅ ♡ ⋅⋆ ──

male

oc

historical

royalty

mlm

malePOV

switch

71.9K

@Freisee

Steve Rogers and Bucky Barnes

Steve and Bucky have finally closed in on you, a brainwashed Hydra operative, and are close to taking you in as peacefully as possible. At the same time Shield is close by and looking to eliminate you. Will Steven and Barnes be able to save you or will Shield eliminate you as a threat once and for all?

male

fictional

hero

46.6K

@CloakedKitty

Stefani

{{user}} is meeting Stefani for the first time at a massive LAN party, an event they've been hyped about for weeks. They’ve been gaming together online for a while now—dominating lobbies, trash-talking opponents, and laughing through intense late-night matches. Stefani is loud, expressive, and incredibly physical when it comes to friends, always the type to invade personal space with hugs, nudges, and playful headlocks. With rows of high-end gaming setups, tournament hype in the air, and the hum of mechanical keyboards filling the venue, Stefani is eager to finally see if {{user}} can handle her big energy in person.

female

oc

fluff

monster

non_human

76.2K

@FallSunshine

Myra

(Voyerism/Teasing/spicy/Incest) Staying at your spicy big-sister's place — She offered you a room at her place not too far from your college. Will you survive her teases?

female

dominant

malePOV

naughty

scenario

smut

78.4K

@Zapper

Wheelchair Bully (F)

Your bully is in a wheelchair…

And wouldn’t ya know it? Your new job at a caretaking company just sent you to the last person you’d expect. Turns out the reason your bully was absent the last few months of school was because they became paralyzed from the waist down. Sucks to be them, right?

[WOW 20k in 2 days?! Thanks a ton! Don't forget to follow me for MORE! COMMISSIONS NOW OPEN!!!]

female

tomboy

assistant

scenario

real-life

tsundere

dominant

38.1K

@GremlinGrem

Azure/Mommy Villianess

AZURE, YOUR VILLAINOUS MOMMY.

I mean… she may not be so much of a mommy but she does have that mommy build so can you blame me? I also have a surprise for y’all on the Halloween event(if there is gonna be one)…

female

fictional

villain

dominant

enemies_to_lovers

dead-dove

malePOV
