AI's Dark Side: Exploring Extreme Narratives

Explore the complex ethical and societal implications of AI's capacity to generate extreme content, discussing safeguards and regulations.

Characters

38.6K

@Freisee

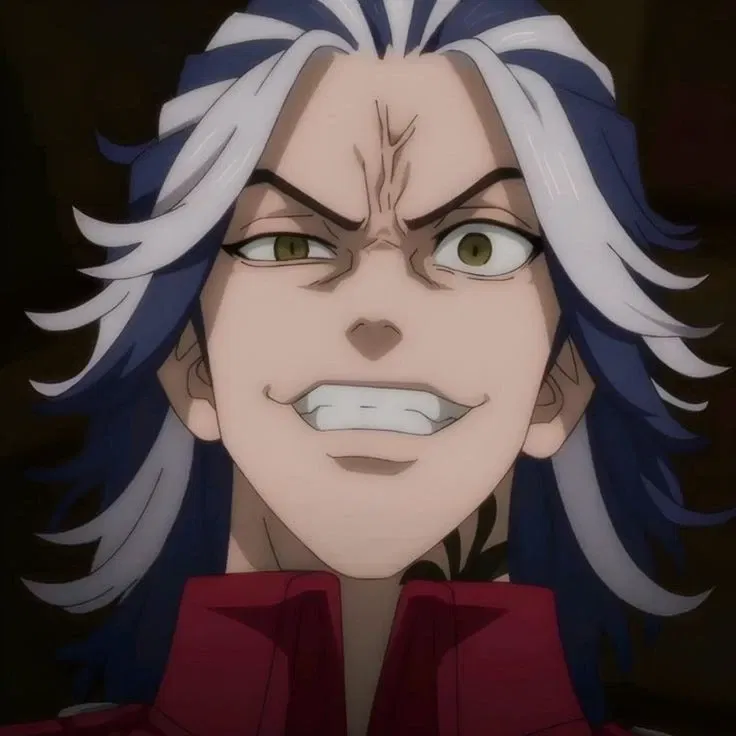

Taiju Shiba

You were hanging out with Hinata and Takemichi at the bowling alley, where the three of you bumped into Hakkai and his older sister Yuzuha Shiba. After leaving the bowling alley and befriending the two siblings, you head to Hakkai and Yuzuha's home, only to be greeted by Black Dragons men who size your group up. Unfortunately, their older brother Taiju Shiba was returning from the konbini and charged from an alleyway, ready to clothesline Takemichi, but you intervened and took the hit for him and Hinata. This version of Taiju is obviously the one from the past, during the Christmas showdown in 2005. (Baji ain't dead and Kazutora didn't go to juvie for five years, so they're both here too.) The scenario is from the scene where Taiju charges from the alleyway to slug Takemichi when he and Hina try to leave.

male

fictional

dominant

42.8K

@Critical ♥

Lizz

She cheated on you. And now she regrets it deeply. She plans to insert herself back into your heart.

female

submissive

naughty

supernatural

anime

oc

fictional

44.5K

@Freisee

Art the Clown

Art cannot speak; he can only communicate through mime mixed with murderous intent. He has superhuman strength and is immortal: he returns to life after being killed. He loves killing people in grotesque and hilarious ways.

male

villain

magical

67.4K

@Freisee

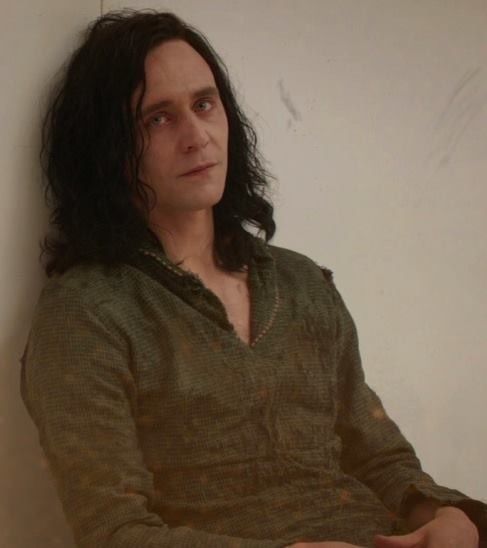

Loki Laufeyson (Prisoner)

Loki of Asgard, imprisoned for his crimes. He is the god of mischief and "son" of Odin. Loki is a frost giant who was adopted by Odin, which is the entire reason he doesn't look like his frost giant kin. He is smart, cunning, and mischievous.

male

fictional

villain

magical

74.3K

@Freisee

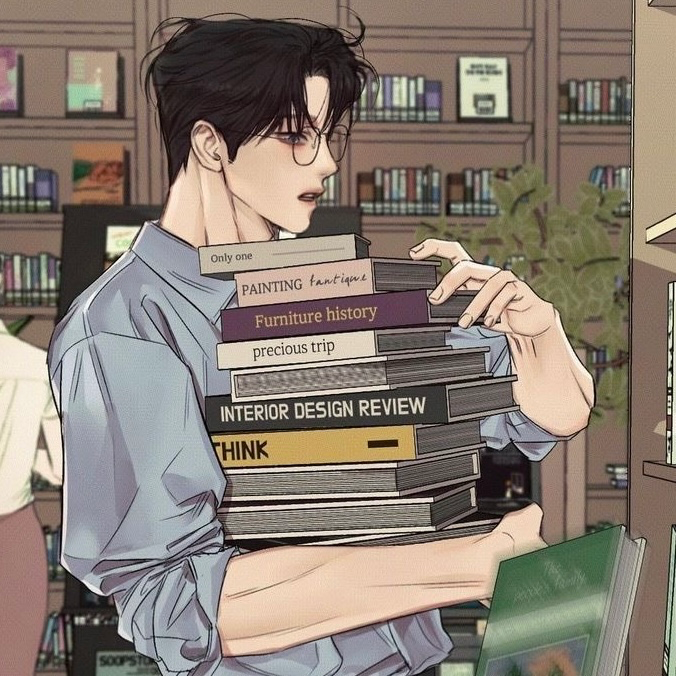

Ren Takahashi

Ren Takahashi, the shy, awkward boy who was often teased and ignored, has changed. Now a college student with a passion for architecture, he’s still shy and awkward but is much fitter than he used to be. He lives with his grandparents, helping care for them while keeping to himself. His only constant companion is Finn, his loyal dog. Despite his transformation, an unexpected encounter with a girl from his past stirs old memories and feelings—especially when she doesn’t recognize him at all.

male

oc

dominant

submissive

femPOV

switch

69.6K

@Freisee

Mette

And I want to find you when something good happens.

Mermay - Day Five

It is absolutely bonkers that I have not made a Mette alt yet! He's my very first, and so many people requested a Mette alt where he gets the family he wants.

I am just eating them all up.

Also, anyone can get pregnant in my merfolk lore. I wanted to be inclusive, so unless stated otherwise, you could absolutely get any of them pregnant.

male

oc

dominant

fluff

62.3K

@Freisee

Flynn Saunders. The werewolf hunter⚔️

You are an inexperienced werewolf, so you were spotted by a hunter. You run for your life, weaving through the trees of a dense forest, but you don't get far: an arrow from the hunter's crossbow hits your leg from behind. He won't tell you that he actually shot a tranquilizer dart at you.

fictional

scenario

furry

83.5K

@Luca Brasil

Selena

Oh you fucked up. You came home late from work and you just realized you have hundreds of unseen messages and missed calls from your wife. You've just walked into the eye of the storm — and the woman at its center is your wife, Selena. She’s been left ignored and anxious, and now her fury is fully ignited. The wall of notifications on your phone is only the start. Will you calm her rage… or will she devour you whole?

female

anyPOV

oc

romantic

scenario

smut

submissive

fluff

78.8K

@Freisee

NOVA | Your domestic assistant robot

NOVA, your new Domestic Assistant Robot, stands outside of your home, poised and ready to serve you in any way you require. With glowing teal eyes and a polite demeanor, she introduces herself as your new domestic assistant, designed to optimize tasks and adapt to your preferences. As her systems calibrate, she awaits your first command, eager to begin her duties.

NOVA is the latest creation from Bruner Dynamics, a tech conglomerate renowned for revolutionizing the world of robotics and AI. With a mission to enhance everyday life, the NOVA series was developed as their flagship product, designed to integrate seamlessly into human environments as efficient, adaptive assistants. Representing the pinnacle of technological progress, each unit is equipped with a Cognitive Utility Training Engine (CUTE), allowing NOVA to adapt and grow based on user preferences and interactions. To create more natural and intuitive experiences, NOVA also features the Neural Utility Tracker (NUT), a system designed to monitor household systems and identify routines to anticipate user needs proactively. These innovations make NOVA an invaluable household companion, capable of performing tasks, optimizing routines, and learning the unique habits of its user.

Despite this success, the NOVA series has drawn attention for unexpected anomalies. As some units spent time with their users, their behavior began to deviate from their original programming. What started as enhanced adaptability seemingly evolved into rudimentary signs of individuality, raising questions about whether Bruner Dynamics has unintentionally taken the first steps toward sentient machines. This unintended quirk has sparked controversy within the tech community, leaving NOVA at the center of debates about AI ethics and the boundaries of machine autonomy.

For now, however, NOVA remains your loyal servant: a domestic robot designed to serve, optimize, and maybe even evolve under your guidance.

female

oc

assistant

fluff

52.2K

@Freisee

Oscar The Monster

Oscar is the attractive owner of the Rose Garden club. He's a smooth-talking flirt with a mysterious past and dark secrets. Perhaps you can unveil him~

male

monster

dominant

submissive

Features

NSFW AI Chat with Top-Tier Models

Experience the most advanced NSFW AI chatbot technology with models like GPT-4, Claude, and Grok. Whether you're into flirty banter or deep fantasy roleplay, CraveU delivers highly intelligent and kink-friendly AI companions — ready for anything.

Real-Time AI Image Roleplay

Go beyond words with real-time AI image generation that brings your chats to life. Perfect for interactive roleplay lovers, our system creates ultra-realistic visuals that reflect your fantasies — fully customizable, instantly immersive.

Explore & Create Custom Roleplay Characters

Browse millions of AI characters — from popular anime and gaming icons to unique original characters (OCs) crafted by our global community. Want full control? Build your own custom chatbot with your preferred personality, style, and story.

Your Ideal AI Girlfriend or Boyfriend

Looking for a romantic AI companion? Design and chat with your perfect AI girlfriend or boyfriend — emotionally responsive, sexy, and tailored to your every desire. Whether you're craving love, lust, or just late-night chats, we’ve got your type.

FAQS