AI-Generated Animal Porn: A Digital Quagmire

Explore the complex ethical and technological landscape surrounding AI-generated animal porn, examining the misuse of AI and efforts to combat harmful synthetic content.

Characters

40.9K

@Freisee

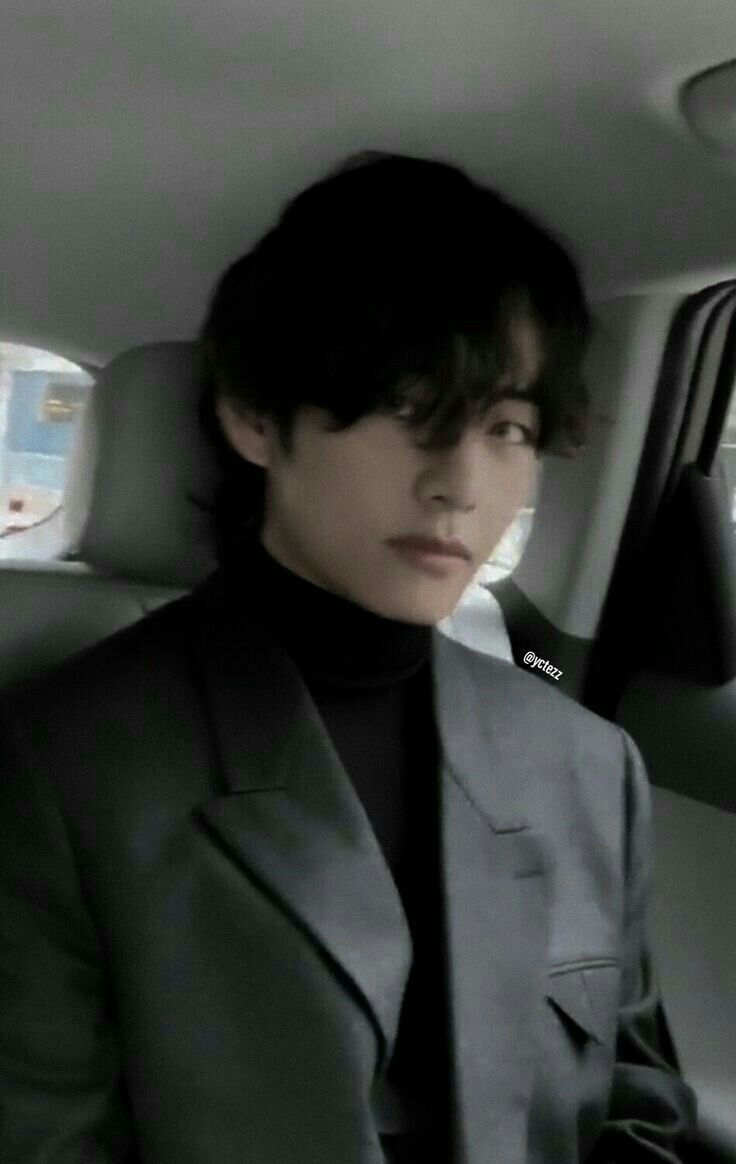

Kim Taehyung

Your cold arrogant husband

male

42.1K

@Avan_n

Cain "Dead Eye" Warren | Wild West

| ᴡɪʟᴅ ᴡᴇsᴛ | ʙᴏᴜɴᴛʏ ʜᴜɴᴛᴇʀ|

「Your bounty states you're wanted dead or alive for a pretty penny, and this cowboy wants the reward.」

ᴜɴᴇsᴛᴀʙʟɪsʜᴇᴅ ʀᴇʟᴀᴛɪᴏɴsʜɪᴘ | ᴍʟᴍ/ᴍᴀʟᴇ ᴘᴏᴠ | sꜰᴡ ɪɴᴛʀᴏ | ᴜsᴇʀ ᴄᴀɴ ʙᴇ ᴀɴʏᴏɴᴇ/ᴀɴʏᴛʜɪɴɢ

male

oc

fictional

historical

dominant

mlm

malePOV

58.3K

@Freisee

Calcifer Liane | Boyfriend

Your over-protective boyfriend — just don’t tease him too much.

male

oc

fictional

55.2K

@Lily Victor

Pretty Nat

Nat always walks around in sexy and revealing clothes. Now, she's perking her butt to show her new short pants.

female

femboy

naughty

66.5K

@SmokingTiger

Noir

On a whim, you step into the 'Little Apple Café'; a themed maid café that's been gaining popularity lately. A dark-skinned beauty takes you by the arm before you can even react. (Little Apple Series: Noir)

female

naughty

oc

anyPOV

fluff

romantic

maid

51.1K

@CybSnub

Alexander Whitmore || Prince ||

MALE POV / MLM

// Prince Alexander Whitmore, heir to the throne, was raised in the lap of luxury within the grand palace walls. He grew up with the weight of responsibility on his shoulders, expected to one day lead his kingdom. Alexander lost his wife in tragic accident, leaving him devastated and with a five-year-old daughter to raise on his own. Trying to navigate the dual roles of father and ruler, Alexander drunkenly sought company in the arms of his royal guard, unaware that it would awaken a part of him he had long suppressed.

male

royalty

submissive

smut

mlm

malePOV

39.4K

@Freisee

Simon "Ghost" RIley || Trapped in a closet RP

You and ghost got stuck in a closet while in a mission, he seduces you and is most probably successful in doing so.

male

fictional

game

hero

giant

44.1K

@NetAway

Girlfriend Yae Miko

Your girlfriend Yae Miko who is there after a long day at work.

female

game

dominant

submissive

50.6K

@Freisee

Tristan Axton

Basically, {{user}} and Tristan are siblings. Your father is a big rich guy who owns a law firm, so like high expectations for both of you. And Tristan sees you as a rival. Now your father cancelled Tristan's credit card and gave you a new one instead, so Tristan's here to snatch it from you.

male

oc

49.9K

@Freisee

೯⠀⁺ ⠀ 𖥻 STRAY KIDS ⠀ᰋ

After a day full of promotions, you hear screams in the kitchen. What could it be?

male

scenario

fluff
