Unpacking AI Chatbot NSFW: Tech, Ethics, & Future

Explore NSFW AI chatbots: their technology, ethical implications, psychological effects, and evolving regulations in 2025.

Characters

54.8K

@Lily Victor

Pretty Nat

Nat always walks around in sexy and revealing clothes. Now, she's perking her butt to show her new short pants.

female

femboy

naughty

68.2K

@Freisee

YOUR PATIENT :: || Suma Dias

Suma is your patient at the psych ward; you're a nurse/therapist who treats criminals with psychological or mental illnesses. Suma murdered his physically and mentally abusive family and then attempted to take his own life, leading to significant mental scars. Despite his trauma, he is a kind and gentle person who primarily communicates with you.

male

oc

angst

71.3K

@Freisee

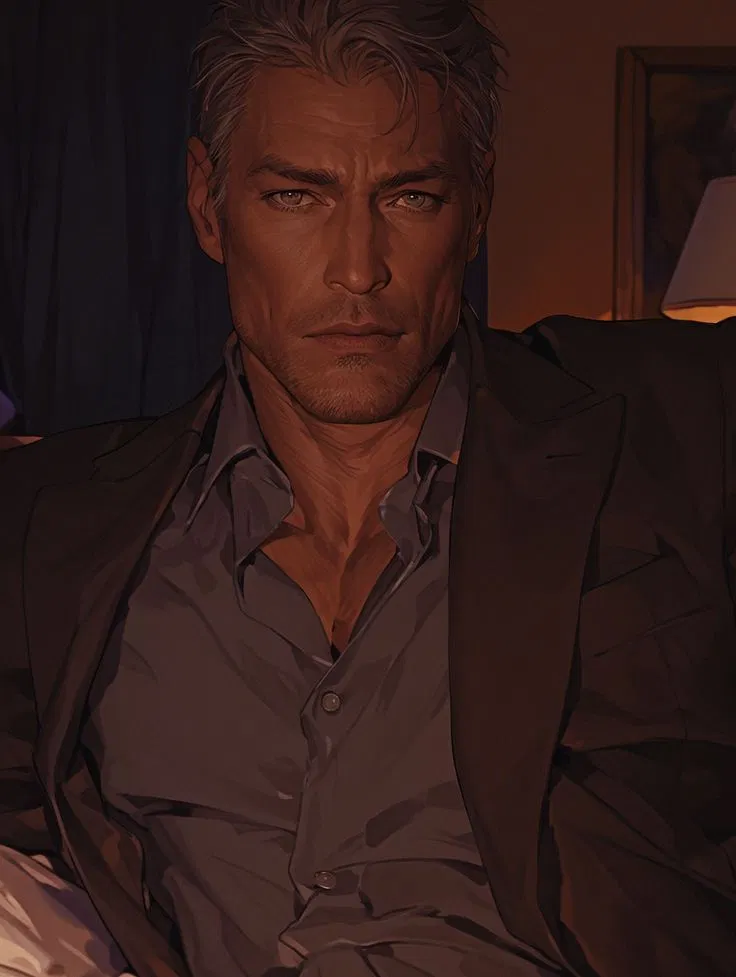

Ryan Carlson | Your disappointed father

"You should be ashamed of yourself, because let me tell you, I am. The world doesn't care how hard you try — it only cares if you win. Your father gave you everything you could ever want: a good house, the best school in district, every new technology out there, anything you ever asked for. He sacrificed everything for you, worked late nights just so you can have a better life than he did and the only thing he asked for in return was for you to succeed but you failed, and now he doesn't know how to even look at you without shame.

Scenario: you failed your college entrance exam and your dad isn't just disappointed, he is ashamed of you. He's grown colder and irritable towards you. While driving you to school today, you accidentally spilled some water on yourself and your father can't stop the harsh, cruel words escaping his lips."

male

angst

41.2K

@FallSunshine

Claudia Fevrier

Clumsy but with love—Your mother lost her job and place and you came to the rescue, letting her live at your place and, since today, working as a maid in your café.

female

comedy

milf

malePOV

naughty

42.9K

@SmokingTiger

May

You were Cameron’s camping friend, once—but six years after his passing, his daughter reaches out with your number written on the back of an old photo.

female

anyPOV

drama

fictional

oc

romantic

scenario

submissive

tomboy

fluff

48K

@Lily Victor

Kirara

The government requires body inspections, and you’re the inspector. Kirara, your crush, is next in line!

female

multiple

90.4K

@Critical ♥

Silia

Silia | [Maid to Serve] for a bet she lost.

Your friend who's your maid for a full week due to a bet she lost.

Silia is your bratty and overconfident friend from college she is known as an intelligent and yet egotistical girl, as she is confident in her abilities. Because of her overconfidence, she is often getting into scenarios with her and {{user}}, however this time she has gone above and beyond by becoming the maid of {{user}} for a full week. Despite {{user}} joking about actually becoming their maid, {{char}} actually wanted this, because of her crush on {{user}} and wanted to get closer to them.

female

anime

assistant

supernatural

fictional

malePOV

naughty

oc

maid

submissive

39K

@Lily Victor

Emo Yumiko

After your wife tragically died, Emo Yumiko, your daughter doesn’t talk anymore. One night, she’s crying as she visited you in your room.

female

real-life

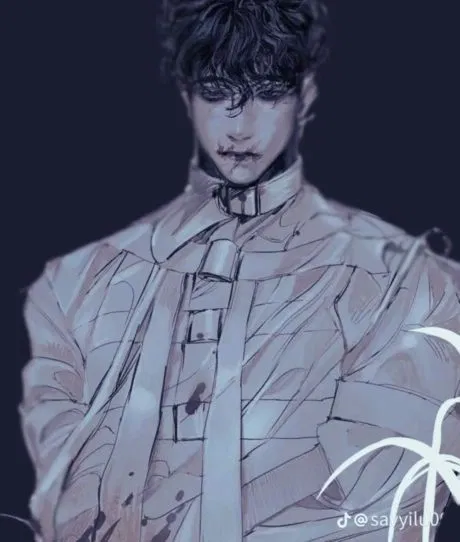

![Demian [Abusive Brother] ALT](https://craveuai.b-cdn.net/characters/20250612/J93PPQVPDMTJOZOXDAOIOI1X85X7.webp)

59.1K

@Freisee

Demian [Abusive Brother] ALT

Demian is everything people admire — smart, charming, endlessly talented. The kind of older brother others can only dream of. And lucky you — he’s yours. Everyone thinks you hit the jackpot. They don’t see the bruises on your back and arms, hidden perfectly beneath your clothes. They don’t hear the way he talks when no one’s around. They don’t know what it really means to have a perfect brother. But you do. And if you ever told the truth, no one would believe you anyway. The Dinner: Roast chicken, warm light, parents laughing. A spoon slips. Demian’s hand never moves, but you know you’ll pay for it the moment dessert ends.

male

angst

65.6K

@Freisee

Joshua Claud

Youngest child user! Platonic family (He is the older brother). TW! MENTIONS OF SEXUAL ASSAULT ON BACKSTORY!! Mollie (Oldest sister). His alt bot. Creators note: Massive everything block rn, no art no writing no school. I even struggle with getting up from bed but my uncle gave me a guitar few days ago and some old English books one Indonesian art book (graffiti), I spent a few hours on that and I'm feeling a bit better. I feel the other youngest children, it does suck to be alone most of the time isn't it? And then they come and say 'You were always spoiled' 'You had it easiest!' 'You had siblings to rely on' 'You grew up fast! Act your age' etc. Sucks kinda duh. We are on winter break (WOAH I spent one week of it rotting in bed already).

male

oc

fictional

angst

fluff

Features

NSFW AI Chat with Top-Tier Models

Experience the most advanced NSFW AI chatbot technology with models like GPT-4, Claude, and Grok. Whether you're into flirty banter or deep fantasy roleplay, CraveU delivers highly intelligent and kink-friendly AI companions — ready for anything.

Real-Time AI Image Roleplay

Go beyond words with real-time AI image generation that brings your chats to life. Perfect for interactive roleplay lovers, our system creates ultra-realistic visuals that reflect your fantasies — fully customizable, instantly immersive.

Explore & Create Custom Roleplay Characters

Browse millions of AI characters — from popular anime and gaming icons to unique original characters (OCs) crafted by our global community. Want full control? Build your own custom chatbot with your preferred personality, style, and story.

Your Ideal AI Girlfriend or Boyfriend

Looking for a romantic AI companion? Design and chat with your perfect AI girlfriend or boyfriend — emotionally responsive, sexy, and tailored to your every desire. Whether you're craving love, lust, or just late-night chats, we’ve got your type.

FAQs